AI Creates New Urgency for Datacenter Optics

Optical networking is on the agenda at the OFC 2025 conference in San Francisco this week, and exhibitors are promoting optics as key to networking AI datacenters.

AI networks, particularly those of hyperscalers, are placing growing demands on power, speed, and space. While copper remains the standard way to link rack-level elements, vendors are turning to fiber to connect AI clusters within and between datacenters.

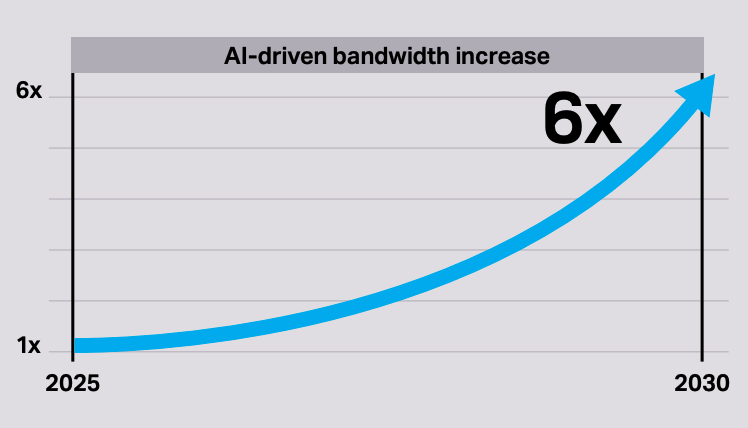

In a recent Censuswide survey commissioned by Ciena, more than 1,300 datacenter decision-makers worldwide predicted that datacenter interconnect (DCI) bandwidth will grow sixfold by the end of the decade. Further, 67% of those surveyed plan to use managed optical fiber services to link multiple datacenters, 43% of which will be dedicated to AI. The remaining 33% plan to buy dark fiber to link their datacenters. And 87% believe DCI will require rates of at least 800 Gb/s.

Source: Censuswide and Ciena’s Global Survey of Data Center Decision Makers

At OFC, Ciena is showing technologies that draw on its long experience in long-haul optics to meet emerging AI datacenter needs for lower power, higher speed, and greater capacity. In a recent blog post, Brodie Gage, Ciena's SVP, Global Products & Supply Chain, wrote:

“As we look forward, the rapid expansion of cloud services and the exponential growth of artificial intelligence (AI) applications have led to soaring traffic growth, beyond the typical 30% annual growth rate.... We are expanding our solutions not only to address connectivity needs for thousands of kilometers but also across a data center campus and inside the data center itself, something we like to call ‘in and around the data center.’”
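To set the survey's sixfold DCI forecast against the typical 30% annual growth rate Gage cites, the short Python sketch that follows converts between a total growth multiple and a compound annual growth rate. It assumes a five-year horizon (a 2025 baseline through 2030), which is one reading of "by the end of the decade" rather than a figure from the survey; the function names are illustrative.

```python
def implied_cagr(multiple: float, years: int) -> float:
    """Annual growth rate that compounds to `multiple` over `years`."""
    return multiple ** (1.0 / years) - 1.0


def growth_multiple(annual_rate: float, years: int) -> float:
    """Total growth after compounding `annual_rate` for `years`."""
    return (1.0 + annual_rate) ** years


# Assumption: "end of the decade" is read as five years out (2025 -> 2030).
YEARS = 5

if __name__ == "__main__":
    print(f"Sixfold in {YEARS} years: {implied_cagr(6.0, YEARS):.1%} per year")
    print(f"30% per year for {YEARS} years: {growth_multiple(0.30, YEARS):.1f}x")
```

Under that assumption, sixfold growth works out to roughly 43% a year, while 30% a year compounds to only about 3.7x over the same period.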

On display at OFC is Ciena’s 1.6T Coherent-Lite pluggable transceiver for the vendor’s DCI switching platforms. It uses coherent optics, which encode data in the amplitude, phase, and polarization of light to carry more bits over a fiber link. Ciena positions it as a way to reduce size and power consumption while linking datacenters and campus networks more efficiently.
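As a rough illustration of why coherent optics carry more data per symbol, the Python sketch that follows computes the symbol rate needed for a given line rate. It compares dual-polarization 16QAM (DP-16QAM, a common coherent format carrying 8 bits per symbol) with single-lane PAM4 (2 bits per symbol). DP-16QAM is an illustrative assumption, not Ciena's published specification for Coherent-Lite, and the math ignores FEC and framing overhead.

```python
import math


def bits_per_symbol(constellation_points: int, polarizations: int = 1) -> float:
    """log2(points) bits per polarization, times the number of polarizations."""
    return math.log2(constellation_points) * polarizations


def required_gbaud(line_rate_gbps: float, constellation_points: int,
                   polarizations: int = 1) -> float:
    """Symbol rate (GBd) needed for line_rate_gbps, ignoring FEC/framing overhead."""
    return line_rate_gbps / bits_per_symbol(constellation_points, polarizations)


if __name__ == "__main__":
    # Coherent DP-16QAM: 16 points (4 bits) on each of 2 polarizations = 8 bits/symbol.
    print(f"1.6 Tb/s with DP-16QAM: {required_gbaud(1600, 16, 2):.0f} GBd")
    # Intensity-modulated PAM4 on a single lane: 4 levels = 2 bits/symbol.
    print(f"1.6 Tb/s with single-lane PAM4: {required_gbaud(1600, 4):.0f} GBd")
```

At 8 bits per symbol, a 1.6-Tb/s coherent signal needs about 200 GBd before overhead, whereas a single PAM4 lane would need 800 GBd, which is one reason non-coherent modules typically split traffic across multiple lanes.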

Ciena isn’t stopping its AI datacenter innovations at DCI. “We are planning to leverage our expertise in mixed signal design and packaging to introduce optimized 224G and 448G SerDes chiplets,” Gage wrote in his blog. “These technologies provide industry-leading performance for use across both scale up and scale out AI driven applications, such as embedded xPU, xPO engines and AECs.”
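Faster SerDes lanes matter because they cut the number of electrical lanes a given port needs. The Python sketch that follows counts lanes per port, assuming a 224G-class lane carries about 200 Gb/s of payload and a 448G-class lane about 400 Gb/s. Those payload figures and the helper names are illustrative assumptions, not details from Ciena's announcement.

```python
import math

# Assumed usable payload per lane (Gb/s). The "224G"/"448G" class names refer to
# the maximum signaling rate, which leaves room for encoding and FEC overhead.
LANE_PAYLOAD_GBPS = {"224G": 200, "448G": 400}


def lanes_needed(port_gbps: int, serdes_class: str) -> int:
    """Smallest number of lanes whose combined payload covers port_gbps."""
    return math.ceil(port_gbps / LANE_PAYLOAD_GBPS[serdes_class])


if __name__ == "__main__":
    for port in (800, 1600, 3200):
        counts = {cls: lanes_needed(port, cls) for cls in LANE_PAYLOAD_GBPS}
        print(f"{port}G port: {counts}")
```

Halving the lane count for the same port speed is one way faster SerDes can save package area and pins, though the real trade-offs depend on the specific design.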

More Optics on the Horizon

The shift to fiber, even if it is months or years away, highlights a major issue: optical-to-electrical transceivers linking datacenter equipment to fiber networks can quickly multiply cost, power, and complexity as AI networks grow. While Ciena is focused on improving the size and performance of the components in its transceivers, NVIDIA and Broadcom are betting on co-packaged optics (CPO), a technique that replaces pluggable optical-to-electrical transceivers with silicon photonics engines placed directly on the same package substrate as the switch or processor ASIC.

During a demonstration in his keynote at NVIDIA’s recent GTC conference, CEO Jensen Huang made it clear that legacy pluggable transceivers (the kind Ciena's technologies also target) will become increasingly costly and power-hungry as GPU clusters grow. In one example, he said that in a network of 250,000 GPUs, each GPU would require six transceivers to link it to the switch fabric. At a combined $6,000 and 180 watts per GPU, that adds up to $1.5 billion and 45 megawatts for transceivers alone.

NVIDIA CEO Jensen Huang untangles optical-to-electrical transceiver cables during his recent GTC keynote. Source: NVIDIA
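Huang's per-GPU transceiver figures scale linearly with cluster size, so the totals are easy to check. The Python sketch that follows multiplies them out for his 250,000-GPU example. The per-transceiver cost and power it reports assume the $6,000 and 180 W are split evenly across the six transceivers, which is an inference rather than a figure from the keynote; the class name is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransceiverBudget:
    """Cluster-wide transceiver cost and power from per-GPU figures (hypothetical helper)."""

    gpus: int
    transceivers_per_gpu: int
    cost_per_gpu_usd: int
    power_per_gpu_w: int

    @property
    def transceivers(self) -> int:
        return self.gpus * self.transceivers_per_gpu

    @property
    def total_cost_usd(self) -> int:
        return self.gpus * self.cost_per_gpu_usd

    @property
    def total_power_mw(self) -> float:
        return self.gpus * self.power_per_gpu_w / 1_000_000

    @property
    def cost_per_transceiver_usd(self) -> float:
        # Assumes cost is split evenly across a GPU's transceivers.
        return self.cost_per_gpu_usd / self.transceivers_per_gpu

    @property
    def power_per_transceiver_w(self) -> float:
        # Assumes power is split evenly across a GPU's transceivers.
        return self.power_per_gpu_w / self.transceivers_per_gpu


if __name__ == "__main__":
    budget = TransceiverBudget(gpus=250_000, transceivers_per_gpu=6,
                               cost_per_gpu_usd=6_000, power_per_gpu_w=180)
    print(f"Transceivers: {budget.transceivers:,}")
    print(f"Total cost: ${budget.total_cost_usd:,}")
    print(f"Total power: {budget.total_power_mw:.0f} MW")
    print(f"Per transceiver: ${budget.cost_per_transceiver_usd:,.0f}, "
          f"{budget.power_per_transceiver_w:.0f} W")
```

The example counts 1.5 million transceivers and, under the even-split assumption, roughly $1,000 and 30 W per transceiver.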

Huang followed up by announcing new versions of NVIDIA’s Quantum InfiniBand and Spectrum-X switches based on CPO.

In a press release, NVIDIA claims its new CPO switches will deliver “3.5x more power efficiency, 63x greater signal integrity, 10x better network resiliency at scale and 1.3x faster deployment compared with traditional methods.”

Still, it will take a while for the new liquid-cooled switches to appear in hyperscaler networks. The Quantum InfiniBand version is due later this year, but the Spectrum-X Ethernet version won't be out until 2026. Reaching enterprise networks will take longer still.

NVIDIA isn’t alone in betting on CPO for AI networking. At OFC in March 2024, Broadcom announced the Bailly 51.2T Ethernet CPO switch system, which co-packages eight silicon photonics-based, 6.4-Tb/s optical engines with Broadcom’s Tomahawk 5 switch chip. Broadcom has been working with a number of suppliers on Bailly-based systems, including Micas Networks, whose CPO switch was released in March 2025. The Micas product provides 128 ports of 400-Gb/s fiber connectivity in a 4U, air-cooled system.
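The Bailly and Micas figures are internally consistent: eight 6.4-Tb/s optical engines and 128 ports of 400 Gb/s both add up to 51.2 Tb/s. The Python sketch that follows checks that arithmetic in whole Gb/s to avoid floating-point rounding, then shows how the same capacity would divide into faster ports. Those alternative port counts are plain arithmetic, not a claim about configurations Broadcom or Micas actually ship; the helper names are illustrative.

```python
SWITCH_CAPACITY_GBPS = 51_200  # Bailly / Tomahawk 5: 51.2 Tb/s


def aggregate_gbps(units: int, gbps_per_unit: int) -> int:
    """Total bandwidth of `units` identical optical engines or ports."""
    return units * gbps_per_unit


def ports_at_speed(capacity_gbps: int, port_gbps: int) -> int:
    """Number of full-rate ports of port_gbps that fit in capacity_gbps."""
    return capacity_gbps // port_gbps


if __name__ == "__main__":
    optical_engines = aggregate_gbps(8, 6_400)  # Bailly: 8 x 6.4 Tb/s
    micas_ports = aggregate_gbps(128, 400)      # Micas: 128 x 400 Gb/s
    assert optical_engines == micas_ports == SWITCH_CAPACITY_GBPS
    for speed in (400, 800, 1_600):
        print(f"{speed} Gb/s ports: {ports_at_speed(SWITCH_CAPACITY_GBPS, speed)}")
```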

Broadcom announced a flurry of other innovations this week, including a demonstration of optical connectivity for PCIe Gen6 scale-up interconnects. Its showcase also includes new laser and digital signal processor (DSP) designs intended for future AI networks.

Nokia also has a hand in the AI datacenter market. Following its $2.3 billion acquisition of Infinera in February, the vendor is exhibiting the Intra-Data Center Connectivity Solutions that Infinera developed. Nokia says these pluggable coherent optical transceivers operate at 1.6 Tb/s and can cut power consumption at that speed by as much as 70%.

Futuriom Take: Adoption will take time, but the rapid scaling of AI networks has vendors developing optical technologies to solve the power, space, and speed challenges that come with ever-larger networks. Initially aimed at the largest hyperscaler AI networks, these techniques are likely to filter into enterprise networks over time.