NVIDIA's CEO Talks Up AI Factories

NVIDIA CEO Jensen Huang announced the vendor’s roadmap yesterday in an extensive keynote highlighting how NVIDIA is meeting growing requirements for AI processing while keeping an eye on future trends. Still, though Huang was on top of his game, inflated expectations of NVIDIA continue to haunt the stock, which is digesting an enormous run of 10X gains over just a few years.

Huang loves math, and he particularly likes applying it to NVIDIA’s products and services. His keynote was replete with numerical examples of how his company’s new GPUs will keep pace with the need for more compute capacity while factoring in the attendant power requirements. At one point, he noted that reasoning models, or agentic AIs, will require 100 times more computational power than was anticipated just last year.

Tokens and More Tokens Required

One of Huang’s foundational messages was that because AI generates tokens, or what one NVIDIA blogger calls “tiny units of data that come from breaking down bigger chunks of information,” AI will continue to grow demand for greater and greater GPU horsepower, particularly as inferencing takes hold.

“Almost the entire world got it wrong,” Huang said in a veiled reference to the reaction to DeepSeek’s touted need for fewer GPUs to produce its models. “The computational requirement, the scaling law of AI, is more resilient, and in fact hyperaccelerated,” he said.

AI Factories Booming

In this vein, the keynote focused on AI factories, or datacenters dedicated specifically to generating AI as quickly as possible in as high a volume as possible. By Huang’s reckoning, buildouts of AI factories will be a $1 trillion market worldwide “very soon.”

Jensen Huang speaking at GTC 2025. Source: NVIDIA

Key to supporting this buildout is NVIDIA’s Blackwell architecture. “Blackwell is in full production!” Huang said. He noted that in 2024, the peak year for its Hopper series, the top four cloud service providers (AWS, Azure, GCP, and OCI) bought 1.3 million GPUs, whereas so far this year the same group has already bought 1.8 million Blackwell GPU packages—containing 3.6 million distinct GPU dies. Indeed, Huang spoke ill of Hopper, claiming it was outdated for today’s AI needs and that as Blackwell production ramps, “You can’t give Hopper away.”
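The figures Huang cited can be compared with a few lines of arithmetic. The following is a minimal sketch, assuming two GPU dies per Blackwell package (which the 1.8 million and 3.6 million figures imply); the variable names are illustrative only, and the resulting ratio depends on whether packages or dies are counted.

```python
# Figures as reported from the keynote; variable names are hypothetical.
hopper_gpus_2024 = 1_300_000      # Hopper GPUs bought by the top four CSPs in 2024
blackwell_packages = 1_800_000    # Blackwell GPU packages bought so far this year
dies_per_package = 2              # assumption implied by 1.8M packages -> 3.6M dies

blackwell_dies = blackwell_packages * dies_per_package

# Growth relative to Hopper's peak year, counted two ways.
package_ratio = blackwell_packages / hopper_gpus_2024
die_ratio = blackwell_dies / hopper_gpus_2024

print(f"Blackwell dies: {blackwell_dies:,}")
print(f"Packages vs. Hopper GPUs: {package_ratio:.2f}x")
print(f"Dies vs. Hopper GPUs: {die_ratio:.2f}x")
```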

Bigger and Better Chips

Enter Blackwell Ultra, a new configuration billed as an “AI factory platform.” NVIDIA says this new product series substantially outstrips preceding Hopper-based solutions by every measure, making it capable of supporting agentic AI, or reasoning models, and physical AI, including robotics. It works with NVIDIA’s Spectrum-X Ethernet and Quantum-X InfiniBand switches, which now support 800 Gbit/s of throughput per GPU. And Blackwell Ultra’s liquid-cooled design reduces its power consumption, a significant factor in AI factory buildouts.

Blackwell Ultra is augmented by NVIDIA Dynamo, new software described as an open-source operating system that orchestrates and accelerates large language model (LLM) inference by assigning its processing phases to different groups of GPUs. According to a press release, “This allows each phase to be optimized independently for its specific needs and ensures maximum GPU resource utilization.”

Blackwell Ultra-based products are expected to be available starting in the second half of 2025 from a fleet of partners, including Cisco, Dell, HPE, Lenovo, and Supermicro, to name a few.

Jensen Huang and HPE CEO Antonio Neri at GTC. Photo by R. Scott Raynovich

A Disappointing Roadmap?

Huang elaborated on the future roadmap of NVIDIA chips, including Rubin, a GPU due out in 2026, and a follow-on Rubin Ultra with greater functionality in 2027. A later architecture based on chips named Feynman is expected in 2028. Rubin will double the data throughput of Blackwell GPUs. To accompany Rubin, a new Vera CPU will double the performance of the Grace CPU used in the Blackwell configuration, NVIDIA says. The Feynman GPU architecture will also incorporate Vera CPUs.

At least one financial analyst was disappointed with this progression. “A shift in Rubin roadmap vs expectations with Rubin more incremental using the same NVL72 rack and Rubin Ultra now the bigger update (4x reticle),” wrote Blayne Curtis of Jefferies in a follow-up note. “Rubin Ultra will have 16 stacks of HBM4e memory, up from 12 stacks of HBM4 prior. Heading into GTC, most thought Rubin would be a 4x reticle but it appears that transition is pushed to Rubin Ultra.”

Apparently, other investors shared Curtis’s take. After the keynote, NVIDIA shares fell more than 3%, though they climbed by less than 1% in early trading today.

Co-Packaged Optical Switches Debut

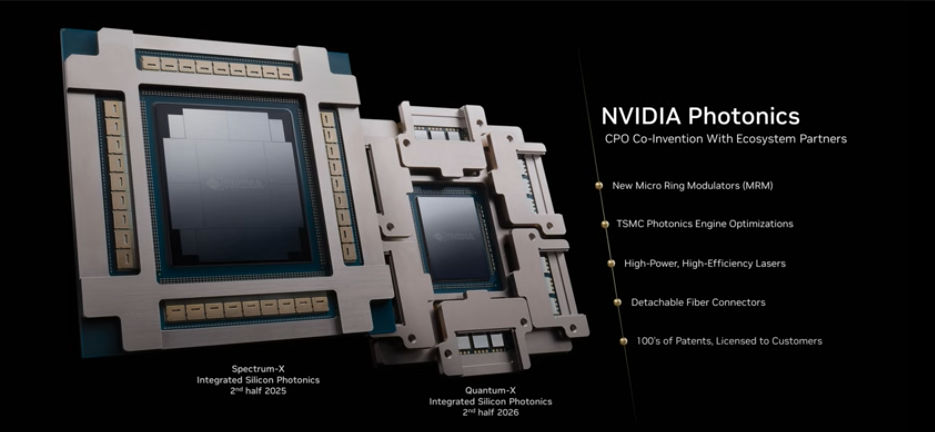

During the mathematically themed firehose of Huang’s keynote, an expected but interesting announcement surfaced: Switches based on co-packaged optics are on the way. Co-packaged optics (CPO) technology eliminates the need for distinct optical transceivers by moving optical components right into the switch next to the switch ASIC. The result is faster performance at lower cost for AI workloads.

NVIDIA CPO switches. Source: NVIDIA

NVIDIA has introduced two versions of its CPO switches, one for its Spectrum-X Ethernet lineup and another for its Quantum-X InfiniBand lineup. The Ethernet version will be available in 2026, while the InfiniBand version will be available later in 2025. Following are two descriptions from NVIDIA:

“The Spectrum-X Ethernet networking platform delivers superior performance and 1.6x bandwidth density compared with traditional Ethernet for multi-tenant, hyperscale AI factories, including the largest supercomputer in the world.

“NVIDIA Spectrum-X Photonics switches include multiple configurations, including 128 ports of 800Gb/s or 512 ports of 200Gb/s, delivering 100Tb/s total bandwidth, as well as 512 ports of 800Gb/s or 2,048 ports of 200Gb/s, for a total throughput of 400Tb/s.”

And for Quantum-X:

“NVIDIA Quantum-X Photonics switches provide 144 ports of 800Gb/s InfiniBand based on 200Gb/s SerDes and use a liquid-cooled design to efficiently cool the onboard silicon photonics. NVIDIA Quantum-X Photonics switches offer 2x faster speeds and 5x higher scalability for AI compute fabrics compared with the previous generation.”
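The port counts and speeds in these descriptions can be cross-checked with simple arithmetic. The following is a minimal sketch, assuming that aggregate bandwidth is just port count times per-port speed and that an 800Gb/s port is built from 200Gb/s SerDes lanes; the helper names are hypothetical. Under those assumptions the Spectrum-X totals come out to 102.4Tb/s and 409.6Tb/s, which suggests the quoted 100Tb/s and 400Tb/s figures are rounded.

```python
# Hypothetical helpers; port counts and speeds come from the NVIDIA descriptions above.

def aggregate_tbps(ports: int, gbps_per_port: int) -> float:
    """Aggregate bandwidth in Tb/s, assuming ports x per-port speed."""
    return ports * gbps_per_port / 1000


def lanes_per_port(port_gbps: int, serdes_gbps: int) -> int:
    """Number of SerDes lanes needed to make up one port."""
    return port_gbps // serdes_gbps


configs = {
    "Spectrum-X, 128 x 800Gb/s": (128, 800),
    "Spectrum-X, 512 x 200Gb/s": (512, 200),
    "Spectrum-X, 512 x 800Gb/s": (512, 800),
    "Spectrum-X, 2,048 x 200Gb/s": (2048, 200),
    "Quantum-X, 144 x 800Gb/s": (144, 800),
}

for name, (ports, speed) in configs.items():
    print(f"{name}: {aggregate_tbps(ports, speed):.1f} Tb/s")

# An 800Gb/s port built on 200Gb/s SerDes uses four lanes.
print("Lanes per 800Gb/s port:", lanes_per_port(800, 200))
```

The two Spectrum-X configurations in each pair carry the same total bandwidth; they differ only in how that bandwidth is divided among ports.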

It’s interesting to note that throughout its press materials, NVIDIA mentions its Ethernet products first, before its InfiniBand ones. Clearly, the vendor is seeing the rising demand for Ethernet. At one point, Huang mentioned that NVIDIA’s goal has been to make Ethernet more like InfiniBand, thereby making the network more performant but easier to use and manage.

Robots on the March

There were many more announcements at this week’s GTC, but robots in factories and automotive applications were especially highlighted. In one announcement, NVIDIA said that industrial software providers worldwide are using the Omniverse digital twin platform and attendant Cosmos “world foundation models” with their solutions to accelerate the use of industrial robotics. NVIDIA has released a new Omniverse Blueprint for factory design and a GR00T Blueprint for synthetic motion generation.

During the robot presentation, a small, dog-like robot named Blue appeared to step out of a video, in which it had been marching across a Mars-like desert, and onto the stage next to Huang. As the keynote closed and the robot disappeared, we could hear Huang’s final comments: “Hey Blue, let’s go home…Thank you, I love you too!”

Blue. Source: NVIDIA

Futuriom Take: Ever the showman, NVIDIA CEO Jensen Huang was at the top of his game at the GTC keynote Tuesday. Still, investors expected more fireworks and remain disappointed with the roadmap's timing. Realistically, though, NVIDIA's innovations continue to shine and indicate the vendor is well positioned to tap the ongoing rise of AI.