HPE Releases First Iteration of Private Cloud AI

Hewlett Packard Enterprise (HPE) has delivered the first in a series of planned products jointly developed with NVIDIA and announced at its HPE Discover event last June. And it looks like a win for HPE.

HPE Private Cloud AI was released September 5 with a debut “solution accelerator” based on NVIDIA’s Inference Microservices (NVIDIA NIMs) software. HPE describes this first iteration as a “GenAI virtual assistant” that helps enterprise developers create interactive chatbots for a range of applications, including tech support, sales, and marketing content creation.

The solution accelerator is the first of a broader series of products planned under the umbrella of NVIDIA AI Computing by HPE, a full-stack integration of NVIDIA’s foundational enterprise AI software, hardware, and networking with HPE’s storage, servers, and GreenLake software. The goal is a turnkey system that combines both vendors’ enterprise AI building blocks and, according to HPE, delivers AI capabilities within “seconds.”

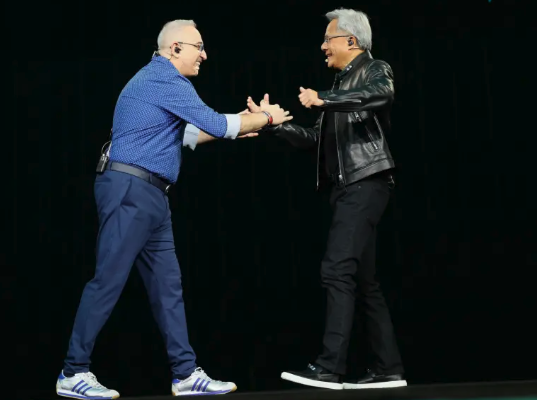

HPE CEO Antonio Neri greets NVIDIA CEO Jensen Huang at HPE's Discover conference in June. Source: HPE

While this solution accelerator generates text-only chatbots, future accelerators will handle voice and images and will support multi-agent AI, HPE said. Multi-agent AI is an emerging trend in GenAI in which multiple intelligent AI agents interact with one another to solve problems.

HPE said it will also add solution accelerators tailored to specific vertical markets, such as financial services, healthcare, retail, energy, and the public sector. Other solution accelerators will incorporate NVIDIA NIM Agent Blueprints, recently announced software packages that bundle the elements enterprises need to build AI applications, including sample applications, reference code, and documentation.

Private AI Is Key

HPE Private Cloud AI’s first solution accelerator is designed to create chatbots by combining an open-source large language model (LLM) with private data loaded into a vector database. To keep AI private, HPE Private Cloud AI is deployed on premises with numerous guardrails for data and AI workloads.
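The pattern described here, pairing an LLM with private documents stored in a vector database, is commonly called Retrieval-Augmented Generation (RAG). The Python sketch below illustrates only the retrieval step under simplifying assumptions: a toy bag-of-words `embed` function stands in for a real embedding model, and the hypothetical `InMemoryVectorStore` class stands in for a vector database. None of these names come from HPE or NVIDIA software, and no LLM is actually called.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (hypothetical stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database holding private documents."""

    def __init__(self) -> None:
        self._items: list[tuple[Counter, str]] = []  # (vector, original text)

    def add(self, text: str) -> None:
        self._items.append((embed(text), text))

    def search(self, query: str, k: int = 2) -> list[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def build_prompt(question: str, store: InMemoryVectorStore, k: int = 2) -> str:
    """Ground the question in retrieved private context; the result would then go to an LLM."""
    context = "\n".join(f"- {doc}" for doc in store.search(question, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {question}"


# Usage: load a few (made-up) private documents and build a grounded prompt.
store = InMemoryVectorStore()
store.add("Refunds are processed within 14 days of a return request.")
store.add("Support hours are 9am to 5pm, Monday through Friday.")
store.add("Enterprise plans include a dedicated account manager.")
prompt = build_prompt("How long do refunds take?", store, k=1)
print(prompt)
```

Because the private documents stay in a store the enterprise controls, only the retrieved snippets are placed in front of the model. That is the property the on-premises deployment is meant to protect.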

The emphasis on private AI is key. HPE anticipated how much enterprise customers would care about keeping their data safe while developing AI, and the vendor’s solutions are designed to protect customer data by guardrailing it within the GreenLake platform and shielding it from the public Internet.

A Choice of Turnkey Configurations

As noted, the new HPE Private Cloud AI can run on a variety of rackmounted configurations that combine HPE ProLiant or Cray servers, GreenLake storage, NVIDIA GPUs, NVIDIA AI Enterprise software, and NVIDIA Spectrum-X Ethernet networking products. The solution accelerators work within HPE’s GreenLake hybrid cloud platform.

While this sounds like a lot of products and platforms, HPE and NVIDIA have integrated them tightly enough that customers can sidestep the complexity and get straight to AI development. As HPE CEO Antonio Neri put it during the company’s recent earnings call:

“With three clicks and less than 30 seconds to deploy, HPE Private Cloud AI dramatically simplifies DevOps, ITOps, and FinOps for enterprise customers, allowing them to easily establish and meter their environments; monitor and observe their infrastructure and applications; and lifecycle manage all aspects of the AI system.”

Turnkey configurations come in sizes ranging from small to “extra large,” and they are supported by a range of system integrators as well as through joint sales and support from NVIDIA and HPE.

In addition to buying turnkey systems, customers can also adopt HPE Private Cloud AI as an on-prem service.

Helping Users Help Themselves

Both HPE and NVIDIA are squarely focused on streamlining enterprise customers’ path to inference, GenAI applications, Retrieval-Augmented Generation (RAG), and other AI workloads. Initially, HPE is positioning HPE Private Cloud AI as a suggested solution for AI in healthcare, retail, manufacturing, public safety, telco, and financial services. Winning customers in those verticals and others will be vital to making HPE Private Cloud AI a success.

But there seems to be good reason for optimism. On the recent earnings call, CEO Antonio Neri said:

“With the series of announcements about HPE Private Cloud AI, we are well positioned to serve our enterprise customers’ needs. Since the announcement less than three months ago, we have seen very high customer interest, with requests for proof-of-concept demos exceeding our expectations. We are increasing sales resources and enabling our partner ecosystem to meet the high demand for demos.”

Sales of HPE Private Cloud AI will be reported as part of the company’s Hybrid Cloud segment, which could use the help. In its fiscal third-quarter earnings report, HPE posted Hybrid Cloud revenues of $1.3 billion, up 4% sequentially but down 7% year-over-year.

Still, the company has high hopes for its new product line. “We believe HPE Private Cloud AI is going to be an important growth driver for our Hybrid Cloud business,” said Neri on the earnings call last week.

Futuriom Take: By offering turnkey AI development as part of a private AI offering, HPE aims to keep enterprise data secure while helping companies streamline AI development. This is a major move for HPE that should lift sales across hardware, software, and services.