MinIO Adds AI Functions to Object Storage

MinIO has taken a major step toward moving object storage to the forefront of AI workloads. AIStor, a recently announced release of its main storage platform, incorporates a raft of new functions and features designed to bring object storage into the “exabyte class” of enterprise deployments.

“We are in a different phase when it comes to data and data infrastructure. The challenges are fundamentally distinct from what the enterprise is accustomed to,” stated Ugur Tigli, CTO of MinIO, in a blog post. “The reasons are scale and the parallel requirement to deliver performance at scale.”

In response to these needs, AIStor includes a new API that extracts information from unstructured data using natural language prompts; a private repository for AI models that’s compatible with Hugging Face; an overhauled user interface designed for easy access to MinIO functions by IT personnel as well as developers; and S3 over RDMA for optimal delivery of data in AI workflows.

Object Storage in the Exabyte Realm

In the past, object storage had a reputation for being too slow for transaction processing and hence too slow for AI workloads, where storage must have throughput capable of keeping up with the parallel processing rates of expensive GPUs. If storage is too slow to deliver, those GPUs can sit idle, a disaster for ROI.

Those days are gone, according to MinIO. The vendor maintains that the public cloud hyperscalers all realized a while back that the only way to scale data delivery for AI was with object storage, which provides a flat namespace for secure, easy distribution and access to data.
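To picture what a flat layout means in practice, here is a minimal Python sketch. It is not MinIO code: the `bucket` contents and the `list_prefix` helper are hypothetical, and a plain dictionary stands in for the object store. The point it illustrates is that S3-style stores keep every object under a single key, so what looks like a folder is only a shared key prefix.

```python
# Illustrative stand-in for an object store bucket: one flat map from key to bytes.
# The keys and payloads are made up for this example.
bucket = {
    "datasets/train/img-0001.jpg": b"\xff\xd8",
    "datasets/train/img-0002.jpg": b"\xff\xd8",
    "datasets/val/img-0001.jpg": b"\xff\xd8",
    "models/checkpoint-0001.bin": b"\x00\x01",
}


def list_prefix(store: dict[str, bytes], prefix: str) -> list[str]:
    """Return the keys that start with `prefix`, in sorted order."""
    return sorted(key for key in store if key.startswith(prefix))


# "Listing a folder" is just a prefix filter over the flat key space.
print(list_prefix(bucket, "datasets/train/"))
print(list_prefix(bucket, "models/"))
```

Because there is no directory tree to walk or lock, keys can be spread across many servers independently, which is a large part of why this layout is credited with scaling well.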

But there are challenges with exascale object storage. MinIO’s experience with large-scale environments paid off here, Tigli said in his blog:

“MinIO made the choice to invest in these AI workloads because we knew MinIO could scale. We were still surprised at how challenges emerged at 1 EiB - and in places we didn’t think about. Places like memory, networking, replication and load balancing. Because we had the right foundation, we have been able to quickly overcome these challenges and allow our customers to continue to grow their data infrastructure unabated.”

Adding AI to the Object Store

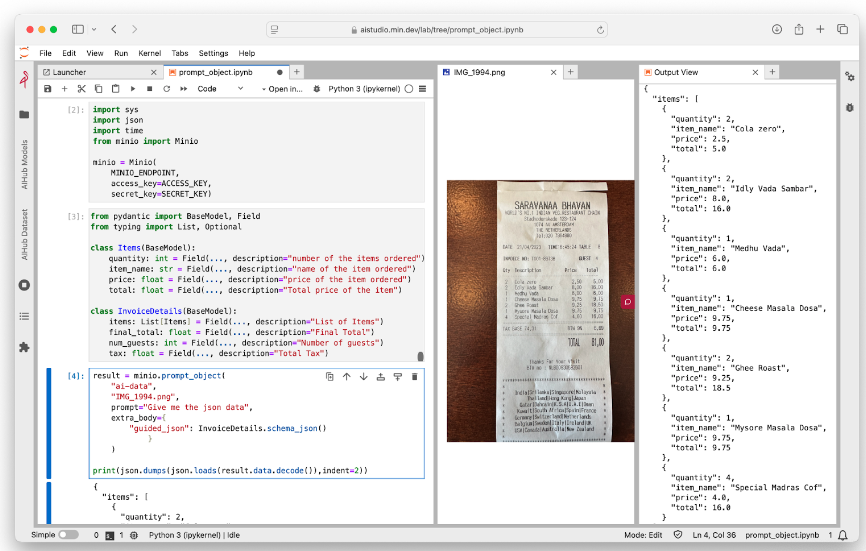

MinIO’s AIStor facilitates AI in several ways. One is promptObject, a new S3 API that queries unstructured data storage directly, delivering answers to queries in natural language text as well as in programmatic language for developers.

“At the most basic level, the MinIO promptObject API lets users or applications talk to unstructured objects as if they were talking to an LLM,” stated MinIO engineer Dileeshvar Radhakrishnan and CMO Jonathan Symonds in a blog post. “That means you can ask an object to describe itself, to find similarities with other objects and to find differences with other objects. It will lead to an explosion of applications that can speak directly to the data residing on MinIO.” The authors note that no expertise with RAG models or vector databases is required; everything is taken care of under the covers by AIStor.

A promptObject view. Source: MinIO

In addition to promptObject, MinIO has equipped AIStor with AIHub, a private cloud version of Hugging Face, the popular model repository. This is designed for enterprises deploying private AI, a trend that is taking hold among large enterprises concerned with maintaining security in AI processes.

Notably, though MinIO can run within popular clouds such as AWS, Azure, and GCP, AIHub is suited for its on-premises implementations, in which AIStor, like MinIO’s previous object storage solutions, utilizes storage servers from HPE, Dell, and Supermicro.

Faster and Easier to Manage

The new release also improves data throughput. AIStor supports data transmission via S3 over Remote Direct Memory Access (RDMA), a technique that moves data directly from one machine’s memory to another’s, bypassing the CPU. That raises throughput to match the high speeds supported by GPU clusters, including 400- and 800-Gb/s Ethernet. In the past, RDMA was used with NVIDIA’s InfiniBand, but it’s been adapted to Ethernet via RDMA over Converged Ethernet (RoCE), which is supported in AIStor.
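To put those link speeds in perspective against the exabyte scale discussed earlier, here is a back-of-the-envelope calculation in Python. It is a rough sketch under idealized assumptions, not a benchmark: it assumes the full line rate is usable with no protocol overhead, and the function names are made up for this illustration.

```python
# Back-of-the-envelope arithmetic only; assumes ideal line rate, no overhead.
EIB_BYTES = 2**60          # 1 EiB expressed in bytes
SECONDS_PER_DAY = 86_400


def line_rate_bytes_per_s(gbits_per_s: float) -> float:
    """Convert a link speed in Gb/s (decimal gigabits) to bytes per second."""
    return gbits_per_s * 1e9 / 8


def seconds_to_transfer(num_bytes: int, gbits_per_s: float, links: int = 1) -> float:
    """Time to move `num_bytes` over `links` parallel links at the given speed."""
    return num_bytes / (line_rate_bytes_per_s(gbits_per_s) * links)


for speed in (400, 800):
    gb_per_s = line_rate_bytes_per_s(speed) / 1e9
    days = seconds_to_transfer(EIB_BYTES, speed) / SECONDS_PER_DAY
    print(f"{speed} Gb/s = {gb_per_s:.0f} GB/s; 1 EiB over one link: about {days:.0f} days")
```

A single 400-Gb/s link carries 50 GB/s at best, so streaming 1 EiB through it would take roughly 267 days. Exabyte-class deployments therefore depend on many links and servers working in parallel, which is where per-connection CPU overhead starts to matter.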

AIStor also features a new Global Console, which MinIO says has been redesigned to facilitate easy access to the vendor’s multiple security functions, including object immutability, identity and access management, and encryption, along with load balancing, caching, replication, and orchestration. MinIO has also added Kubernetes support to aid the management of environments with “hundreds of servers and tens of thousands of drives.”

MinIO isn’t alone in seeking a prime spot in AI workloads for its object management platform. Competitor Cloudian recently released a version of its HyperStore system that supports NVIDIA’s GPUDirect Storage technology (which supports direct linkage from storage to GPU memory) and RDMA. And vendors of parallel file systems, including CTERA, Panzura, Qumulo, and Weka (among others), claim to support high-throughput links between object storage and GPUs, even without GPUDirect.

Futuriom Take: MinIO’s AIStor brings AI-ready features and high-speed performance to its object storage platform for exabyte-scale environments. The move is part of a general trend toward bringing object storage into close alignment with the needs of AI workloads.