An Overview of Networking for AI at the Edge

(Editor's Note: This is a preview of some of the material from our upcoming Networking Infrastructure for AI report, to be published in late May. If you have editorial questions or questions about sponsorship, please contact us here.)

Networking for AI doesn’t happen only in AI factories built on GPU clusters. It is also shifting to the network edge, where many AI applications will run in the form of independent intelligent agents or inference engines. Examples include sensors, robots, and controllers used in manufacturing environments; automated responses to retail shoppers’ mobile phone voice requests; and oil and gas monitoring.

Networking is extended to the AI edge in several ways. The public cloud hyperscalers are building out their global networks, on which they provide instances of compute and storage resources for AI workloads. Content delivery network (CDN) providers are also extending their services with compute and storage features added to support AI. And in what may be the most significant trend, a basket of technologies extends AI to the edge via software-defined networking and related techniques.

Let’s take a closer look.

Hyperscalers Extend Connectivity and Boost Services

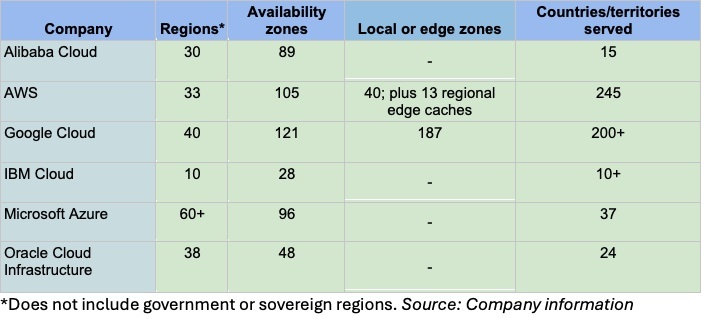

As noted, the cloud hyperscalers offer their own networked facilities worldwide, including regional connections and points of presence (PoPs) (see chart above). These connections terminate in instances of compute and storage to facilitate AI processing.

AWS offers AWS Wavelength and AWS Outposts functionality, which bring AWS cloud services to the telco edge over the AWS network or to the customer premises, respectively.

Google Cloud recently announced new features for its existing Google Distributed Cloud (GDC). That platform comprises hardware and software linked over Google Cloud’s network -- or set up in a customer’s datacenter to support AI processing at the edge.

Microsoft's Azure Stack series brings Azure AI services to the edge of the cloud provider's network connections or to the customer’s datacenter. Options include Azure Stack Edge, which works with a hardware appliance optimized with accelerated computing to run machine learning or AI workloads at a remote edge site over Azure’s network.

CDN Providers Support AI at the Edge

Providers of content delivery networks, including the hyperscalers that have their own CDNs, are attempting to support GenAI at the edge.

Akamai, for instance, has outfitted selected regions worldwide with compute resources embedded with virtual machines at the edge of network connections. Called Generalized Edge Compute (Gecko), the service uses technology leveraged from Akamai’s purchase of infrastructure-as-a-service provider Linode in 2022. There are presently about 10 Gecko locations worldwide, with an additional 75 in the works for delivery this year. Gecko depends on Akamai’s 4,200 worldwide points of presence to deliver the goods to the edge.

Cloudflare has equipped its worldwide datacenters with GPUs, so that the vendor's network can extend inference services to enterprise customers via its CDN. Cloudflare has partnered with Hugging Face to bring a range of open-source models to its Workers AI machine-learning platform for inferencing.

Fermyon is a WebAssembly (Wasm) developer that created Spin, an open-source tool for software engineers, and Fermyon Cloud, a premium cloud service aimed at larger enterprises. Thanks to its ability to shrink the amount of code required to run cloud applications on the Web, Fermyon's Wasm could be used to run AI in distributed Internet of Things (IoT) environments or in cloud infrastructure supporting edge data analytics and AI. Fermyon recently announced a Kubernetes platform for WebAssembly, which the vendor says increases the density of Wasm workloads by 50X, improving the technology’s ability to support AI.

More Approaches to AI Networking at the Edge

A basket of products and services designed for edge infrastructure is based on technologies that build a logical, software-defined, secure network for cloud applications across multiple private clouds, datacenters, and public clouds. This infrastructure will be suited for a variety of applications, including AI, because it will enable secure, low-latency connectivity.

These solutions combine application layer and network layer functions to eliminate the complexity of traditional routed networks while ensuring lower latency, higher throughput, and better security and observability. These features allow edge applications and devices to securely and efficiently process and deliver information, including AI inferencing.

Arrcus, for instance, offers Arrcus Connected Edge for AI (ACE-AI), which deploys the vendor’s distributed, cloud-native network operating system – ArcOS – to help build networks on the fly that are optimized for AI workloads at the edge. ACE-AI deploys Ethernet along with its virtualized distributed routing (VDR), priority flow control (PFC), intelligent buffering and congestion control, and visibility functions to deliver edge services that are hardware-agnostic and will work with white-box switches and routers.

Aviatrix is a multicloud networking platform that allows network managers and cloud engineers to build a distributed virtual fabric from edge to cloud. This includes an enterprise-grade secure cloud network, backbone, and edge for mission-critical applications. The goal is to deliver a simplified and consistent networking architecture in and across cloud service providers.

F5 offers an application-layer networking solution that eliminates the need for MPLS or VPNs and extends to multicloud and hybrid cloud networks via APIs. The vendor stresses security in its solutions, and it’s partnered with identity management vendors, including Microsoft, for identity-based security, to which it’s added user and entity behavior analytics (UEBA). In addition to the zero-trust connectivity, F5’s services include load balancing and acceleration for links to edge applications.

Graphiant offers application-level networking that will be helpful for AI via the Graphiant Network Edge, which deploys a “stateless core routing approach” that works like a router and firewall in one, without the need to encrypt and decrypt packets. Instead, it establishes routing as a core function in the cloud, which in turn tags remote devices with packet instructions. All of this is provided as-a-service, which streamlines traffic to and from the network edge.

Prosimo offers secure mesh networking between clouds. The platform creates a secure, multi-cloud transit network that links to multiple edge locations. The network also supports microsegmentation at the application level, making for even more secure edge access.
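To make the idea of application-level microsegmentation concrete, here is a minimal sketch of a default-deny policy keyed on application identity rather than on IP addresses or subnets. It is not Prosimo's implementation or API; the application names, ports, and the `is_allowed` helper are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """A connection attempt described by application identity, not IP address."""
    src_app: str
    dst_app: str
    port: int


# Hypothetical allow-list: only these application-to-application paths are permitted.
ALLOWED_SEGMENTS = {
    ("camera-gateway", "inference-api", 8443),
    ("inference-api", "model-store", 443),
}


def is_allowed(flow: Flow) -> bool:
    """Default deny: a flow passes only if it matches an explicit allow-list entry."""
    return (flow.src_app, flow.dst_app, flow.port) in ALLOWED_SEGMENTS
```

In this sketch, an edge camera gateway can reach the inference API but cannot reach the model store directly, even if both sit on the same network segment.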

Vapor IO offers an AI-as-a-service offering called Zero Gap AI that deploys Supermicro servers equipped with NVIDIA’s MGX platform and GH200 Grace Hopper Superchips, connected via Vapor IO’s Kinetic Grid platform, a fiber-based network augmented with optical components. This Kinetic Grid is based on multiple “micro modular data centers” with edge-to-edge networking that brings AI close to specific customer locations. Zero Gap AI is currently available in 36 U.S. cities.

ZEDEDA specializes in orchestrating devices and applications associated with edge nodes by providing only the operating system needed to run the application at the edge; there are no runtimes or libraries to slow things down. ZEDEDA also works via its own API with Kubernetes, Docker, and virtual machines to speed up edge applications and reduce operational costs – all of which play into more efficient networking of AI inferencing.

Zentera Systems decouples security from network topology, running an overlay application network with full packet visibility to protect AI workloads end to end throughout the network. “We tunnel packets between the application and network layers,” said Michael Ichiriu, VP of marketing and product at Zentera. An orchestrator runs policies for the edge-to-AI platform the firm provides.

SONiC for AI Networking at the Edge

Software for Open Networking in the Cloud (SONiC) is an open-source network operating system (NOS) based on Linux, launched by Microsoft in 2016. In 2022, it was transferred to the Linux Foundation to expand its potential as a community project. Because it is simple, easy to scale, supports multi-tenancy, and is agnostic to hardware, SONiC has gained in popularity as a means of extending networking to the edge. It is expected to play a significant role in delivering data and processing at the edge, including for AI applications. But to serve the edge effectively, SONiC requires intelligent management functions, which a range of vendors have been working to provide.

Aviz Networks has built the Open Networking Enterprise Suite (ONES), a multivendor networking stack for SONiC, enabling datacenters and edge networks to deploy and operate SONiC regardless of the underlying ASIC, switching, or the type of SONiC. It also incorporates NVIDIA Cumulus Linux, Arista EOS, or Cisco NX-OS into its SONiC network. The platform monitors network traffic, bandwidth utilization, and system health, along with other functions and characteristics throughout all areas of the network fabric, including the edge.
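As a rough illustration of what monitoring bandwidth utilization involves, the sketch below derives link utilization from two samples of an interface byte counter. It is a generic calculation, not the ONES API; the function name, parameters, and the 64-bit counter width are assumptions made for this example.

```python
def utilization_percent(bytes_start: int, bytes_end: int, interval_s: float,
                        link_speed_bps: float, counter_bits: int = 64) -> float:
    """Return link utilization (%) between two samples of an interface byte counter.

    Assumes a monotonically increasing counter that wraps at 2**counter_bits.
    """
    if interval_s <= 0 or link_speed_bps <= 0:
        raise ValueError("interval_s and link_speed_bps must be positive")
    # The modulo handles a single counter wrap between the two samples.
    delta_bytes = (bytes_end - bytes_start) % (2 ** counter_bits)
    bits_per_second = delta_bytes * 8 / interval_s
    return 100.0 * bits_per_second / link_speed_bps
```

For instance, 1.25 GB transferred over a 10-second interval on a 10 Gbit/s link works out to 1 Gbit/s, or 10% utilization.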

Hedgehog is a cloud-native software company with a view to improving and extending SONiC to enable compute work at the network edge. The software operates on a range of hardware switches, smartNICs, and DPUs from Celestica, Dell, Edgecore Networks, NVIDIA, and Wistron. It features a guided setup option to establish an enterprise network via this range of devices, which can then be programmed with Terraform, Ansible, or the Kubernetes API.

Futuriom Take: Networking is being extended to the edge and augmented with resources that enable AI training and inferencing in distributed networks. Especially significant are services that use upper-layer techniques and virtual routing to connect, secure, and control AI traffic to edge locations.